Preface

If you still want to use it for your projects, please do them with the knowledge that the API might change in next versions, and you might have to keep it updated until the system is stable. If you have any problems with the program, or would like some new features, please make an github issue, we will try to accomodate it if it fits within the scope of the program.

The PDF version of this book (Experimental)

The PDF Book is generated using automated script, so it might have some problems, please refer to the web version of the NADI Book if there are problematic/incomplete contents.

Acknowledgements

Thank you everyone who has been consistently testing this software throughout the development and providing feedbacks. Specially the members of Water System Analysis Lab in University of Cincinnati.

Funding

Grant: #W912HZ-24-2-0049 Investigators: Ray, Patrick 09-30-2024 – 09-29-2025 U.S. Army Corps of Engineers Advanced Software Tools for Network Analysis and Data Integration (NADI) 74263.03 Hold Level:Federal

What is NADI System?

Network Analysis amd Data Integration (NADI) System is a system of programs made to make network based data analysis easier and more accessible.

It consists of multiple tools, that perform two important functions, network detection and network analysis. First part is done throuh the Geographic Information (GIS) Tool, while the second part is done using a Domain Specific Programming Language (DSPL) called NADI Task system.

Why use NADI System?

Hydrologic modeling involves the integration of diverse data to simulate complex (and often poorly understood) hydrological processes. The analysis of complex hydrological processes often requires using domain specific calculations, and the visual representation requires the creation of custom maps and plots. Both of which can be a repetitive and error-prone processes, diverting time from data interpretation and scientific inquiry. Efficient methods are needed to automate these tasks, allowing researchers to focus on higher-level analysis and translation of their findings.

Current solution to that problem is to either use general purpose programming languages like Python, R, Julia, etc., or use domain specific software packages to increase the reliability of the tasks. Domain Specific Programming Languages (DSPLs) like the NADI Task system provides better syntax for domain specific tasks, while also are general purpose enough for users to extend it for their use cases. NADI System is trying to be the software framework that can connect those two by integrating with various softwares and providing a intuitive way to do network based data analysis.

Some example functionality of NADI system includes:

- Detection of upstream/downstream relationships from stream network,

- Network based programming using an extensible custom programming language,

- Interactive plots and reports generation,

- Import/export from/to various GIS data formats, etc.

Network Based Data Analysis

If you have data that are network based, like in case of data related to points in a river. NADI provides a text representation of the network that can be manually created with any text editor, or through NADI GIS tool.

Some domains where the data are network based (directed tree graph) are:

- River networks,

- File/Directory structures,

- Human Resources in a Company,

- Decision Tree / Policy Tree,

- Modeling work with dependencies to component models,

Task System

The Domain Specific Programming Language (DSPL) developed for network analysis in NADI makes network analysis simple and intutive. So, it is easier to understand, interpret and catch mistakes. While the NADI IDE has network visualization tools built in that can help you visualiza the network attributes for visual analysis.

For example, implementing “cumulative sum of streamflow” in nadi:

node<inputsfirst>.cum_sf = node.streamflow + sum(inputs.streamflow);

The trying to do this in Python while making sure input nodes are run before the output. So you might have to write a recursive algorithm like this:

def cum_sf(node):

node.cum_sf = node.streamflow + sum([cum_sf(i) for i in node.inputs()])

return node.cum_sf

cum_sf(network.outlet())

While a common mistake people might make is to write a simple loop like this:

for node in network.nodes():

node.cum_sf = node.streamflow + sum(

[i.streamflow for i in node.inputs()]

)

Which doesn’t make sure input nodes are run before output in this case, and can error out when some variables are not present. NADI provides special syntax for cases where you can make sure variables exist before running something.

Extensibility

NADI has two types of plugin systems, which means users can write their own analysis in any programming language and have it interact with NADI through attributes, or they can write it in rust and have even more direct interaction.

Who this book is for

This book has sections explaining the concepts of the NADI system, its developmental notes, user guide and developer guide.

Hence it can be useful for people who:

- Want to understand the concepts used in NADI,

- Want to use NADI system for their use case,

- Want to develop plugin system for NADI,

- Want to contribute to the NADI system packages, etc.

Although not intended, it might include resources and links to other materials related to Rust concepts, Geographical Information System (GIS) concepts, Hydrology concepts, etc. that people could potentially benefit from.

Please note that, this book assumes you have some knowledge of programming, like control flow, loops, functions, variables, etc. As as well some knowledge of GIS if you are reading network detection + GIS visualization specific functions. Those concepts will not be covered in this book. And if you are reading the developer reference or compiled plugin development, some knowledge of rust is assumed, but this is not necessary for the users of NADI that will only write code in NADI.

How to use this book

You can read this book sequentially to understand the concepts used in the NADI system. And then go through the references sections for a specific use cases you want to get into the details of.

- If you are in a hurry, but this is your first time reading this book, at least read the Core Concepts, then refer to the section you are interested in.

- If you want to know a specific details, click on the search icon at the top left of the book to get the search bar. You can search text there and visit the pages.

- If you want to learn about the QGIS Plugin, NADI GIS, goto “Network Detection (GIS)” section.

- If you want to learn about the Domain Specific Programming Language (DSL), refer to the “Network Analysis (DSL)” section.

- Learn by Example contains some simple examples you can follow to learn the basic syntax of the Task System.

- If you want more detailed examples of the use of Task System, refer to the chapters in “Example Research Problems” section on the sidebar.

- If you want reference for functions used in Task System goto “Plugin Functions”, the Internal Plugins section contains details on the functions that are available with nadi system, while the external plugins are plugins that were loaded while this book was compiled.

If you have suggestions on the formatting, or arrangement of chapters in this book, please make an issue on the GitHub repository for this book.

Code Blocks

The code blocks will have example codes for various languages, most common will be string template, task, python, and rust codes.

String template and task have custom syntax highlights that is intended to make it easier for the reader to understand different semantic blocks.

For task scripts/functions, if relevant to the topic, they might

have Results block following immediately showing the results of the

execution.

For example:

network load_file("./data/mississippi.net")

node[ohio] render("{_NAME:case(title)} River")

Results:

{

ohio = "Ohio River"

}

Task and Rust code block might also include lines that are needed to get the results, but hidden due to being irrelevant to the discussion. In those cases you can use the eye icon on the top right side of the code blocks to make them visible. Similarly use the copy icon to copy the visible code into clipboard.

String Template Syntax Highlight

The syntax highlight here in this book makes it so that any unknown transformers will be marked for easy detection to mistakes.

This shows var = {var:unknown()}, {_var:case(title)}

Besides this, the syntax highlight can help you detect the variables

part (within {}), lisp expression (within =()), or commands

(within $()) in the template.

Note: commands are disabled, so they won’t run during template rendering process. But if you are rendering a template to run as a command, then they will be executed during that process.

How to Cite

The sections below show you a bibliography entry in ASCE format, and BibTeX format that you can copy.

Journal Papers: TODO

The papers are currently still being worked on, and will be added here when they are published.

This book

You can cite the link to this book as follows Make sure to replace Accessed Data by today’s date.

Atreya, G. 2025. “Network Analysis and Data Integration (NADI).” Accessed May 1, 2025. https://nadi-system.github.io/.

@misc{PrefaceNetworkAnalysis,

title = {Network {{Analysis}} and {{Data Integration}} ({{NADI}})},

author = {Atreya, Gaurav},

year = {2025},

url = {https://nadi-system.github.io/},

urldate = {2025-05-02}

}

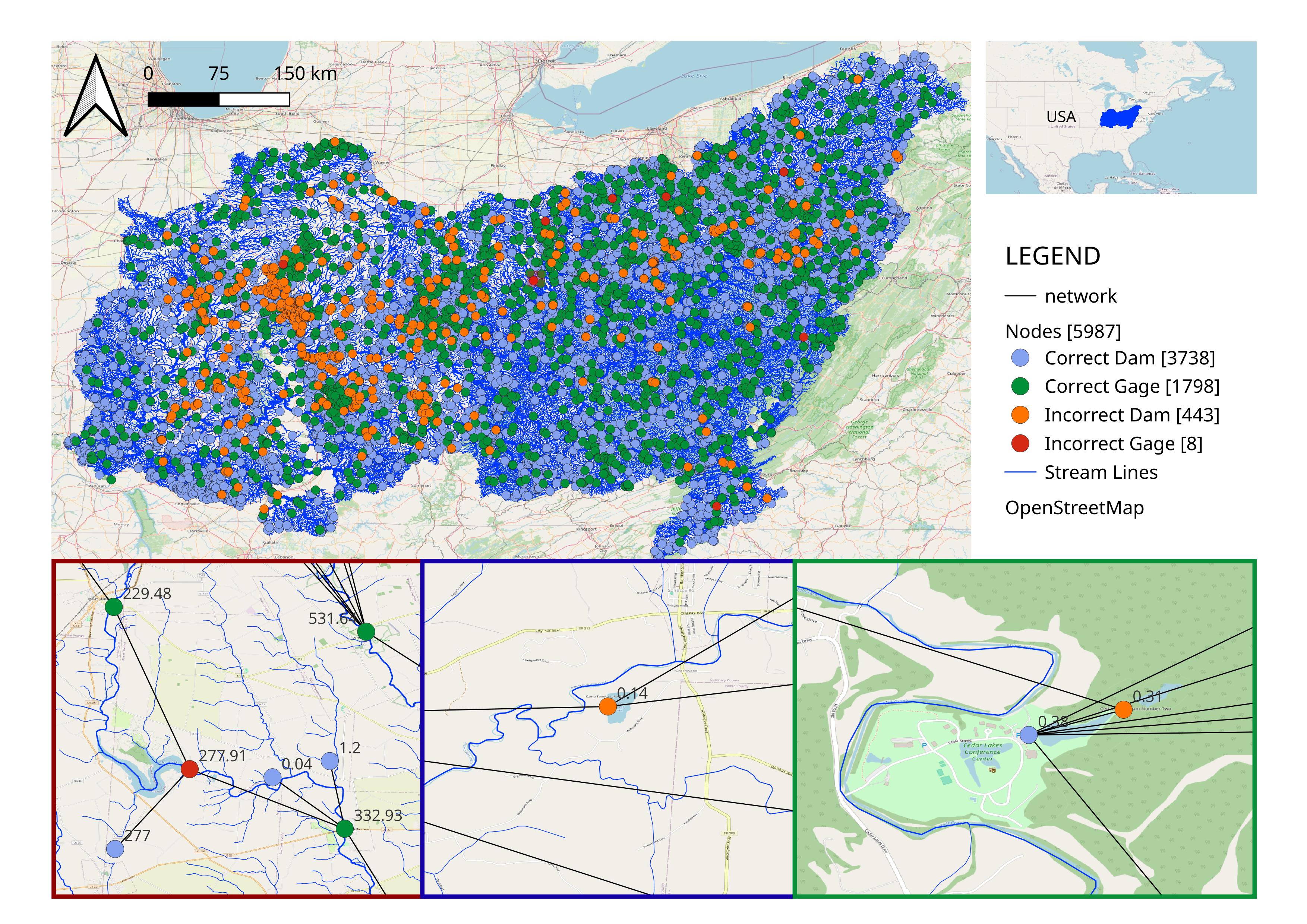

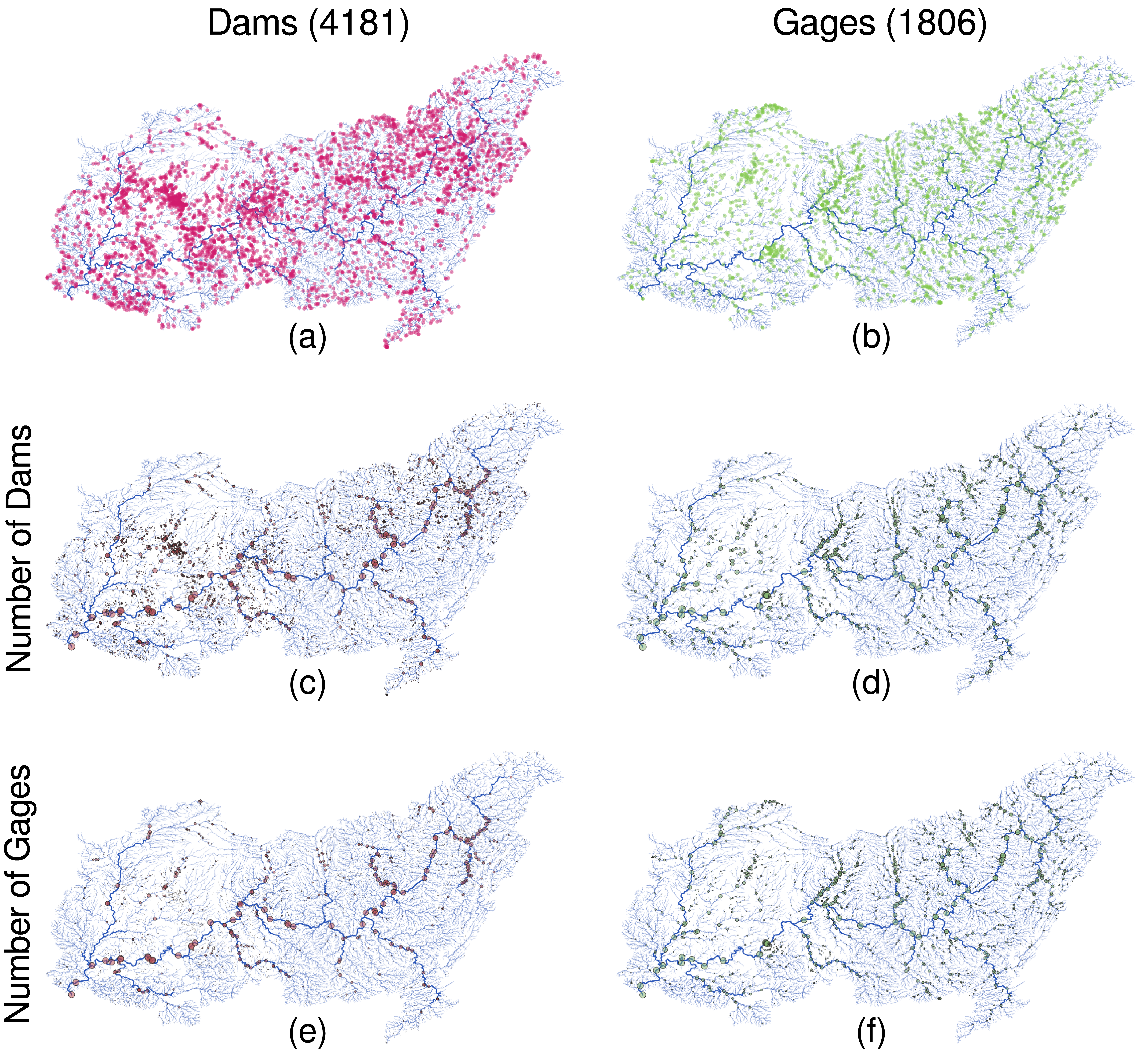

Works using NADI System

Atreya, G., G. Mandavya, and P. Ray. 2024. “Which came first? Streamgages or Dams: Diving into the History of Unaltered River Flow Data with a Novel Analytical tool.” H51L-0865.

@inproceedings{atreyaWhichCameFirst2024,

title = {Which Came First? {{Streamgages}} or {{Dams}}: {{Diving}} into the {{History}} of {{Unaltered River Flow Data}} with a {{Novel Analytical}} Tool},

shorttitle = {Which Came First?},

booktitle = {{{AGU Fall Meeting Abstracts}}},

author = {Atreya, Gaurav and Mandavya, Garima and Ray, Patrick},

year = {2024},

month = dec,

volume = {2024},

pages = {H51L-0865},

urldate = {2025-06-03},

annotation = {ADS Bibcode: 2024AGUFMH51L.0865A}

}

Network Analysis and Data Integration (NADI)

NADI is group of software packages that facilitate network analysis and do data analysis on data related to network/nodes.

NADI System consists of:

| Tool | Description |

|---|---|

| NADI GIS | Geographic Information (GIS) Tool for Network Detection |

| NADI Task System | Domain Specific Programming Language |

| NADI Plugins | Plugins that provide the functions in Task System |

| NADI library | Rust and Python library to use in your programs |

| NADI CLI | Command Line Interface to run NADI Tasks |

| NADI IDE | Integrated Development Environment to write/ run NADI Tasks |

The github repositories consisting of source codes:

| Repo | Tool |

|---|---|

| nadi-gis | Nadi GIS |

| nadi-system | Nadi CLI/ IDE/ Core |

| nadi-plugins-rust | Sample Plugins |

| nadi-book | Source for this NADI Book |

Workflow

A Typical workflow in NADI System consists of the follwing 4 processes:

- Download Data

- Pre-Process Data

- Network Detection (using NADI GIS)

- Network Analysis (Using NADI System’s DSL, and Plugins)

- Post Process

The figure below shows the order of how the components of the NADI System (blue) is used along side external tools (black).

Here the numbers in red circles are the order of use for different tools. Here the “Run Tasks” step represents running the NADI DSL Code in the System, so it is further divided into the tasks inside the code. The numbers on the blue circles show a typical use case of the DSL to perform a research work.

For exact details on what a typical research workflow involving the NADI DSL is, refer to the examples.

NADI GIS

Geographic Information (GIS) Tool for Network Detection. The main purpose of the NADI GIS is to find the network connectivity between a set of points using a stream network (which can be developed from elevation models, or downloaded from national databases).

NADI GIS can be used as a terminal command or QGIS plugin, refer to installation section for how to install it.

NADI Task System

Task System is a Domain Specific Programming Language (DSL) that is designed for river network analysis. This is the main core of the network analysis. This is included when you install NADI as a library, CLI or GUI.

NADI Plugins

The functions available to call in the task system comes from plugins. There are many internal plugins with core functions already available, while users can load their own plugins for other functions.

Refer to the plugins section of the book for more details on how to use plugins, how to write them and what to keep in mind while using them.

NADI libraries

Rust and Python library to use in your programs. Rust library nadi_core is available to download/use from cargo with the command cargo add nadi_core.

While Python library requires you to clone the repo and build it with maturin (for now).

Future plan for it includes publishing it in pypi.

Rust Libraries

If you are not writing your own rust programs or plugins, you can skip this section.

There are three rust libraries:

| Library | Use |

|---|---|

nadi_core | Core library with data types, and plugin structure |

nadi_plugin | Rust Procedural macro library to write nadi plugins |

string_template_plus | Library for string templates with variables |

Everything is loaded by nadi_core so you don’t need to load them separately.

NADI Python

While using NADI from python library, you only have access to nadi data types (Node, Network, etc), and the plugin functions, which are enough for most cases as python language syntax, variables, loops etc will give you a lot of flexibility on how to do your own analysis. The python module is structured as follows:

nadi [contains Node, Network, etc]

+-- functions

| +-- node [contains node functions]

| +-- network [contains network functions]

| +-- env [contains env functions]

+-- plugins

+-- <plugin> [each plugin will be added here]

| +-- node [contains node functions]

| +-- network [contains network functions]

| +-- env [contains env functions]

+-- <next-plugin> and so on ...

The functions are available directly through functions submodule, or through each plugin in plugins submodule. An example python script looks like this:

import nadi

import nadi.functions as fn

net = nadi.Network("data/ohio.network")

for node in net.nodes:

try:

_ = int(node.name)

node.is_usgs = True

# this just shows how nadi functions can be called from python

# for simple functions please use the python native functions

print(fn.node.render(node, "Node {_NAME} is USGS Site"))

except ValueError:

node.is_usgs = False

This code shows how to load a network, how to loop through the nodes, and use python logic, or use nadi functions for the node and assign attributes.

More detail on how to use NADI from python will be explained in NADI Python chapter.

NADI CLI

Command Line Interface to run NADI Tasks.

This can run nadi task files, syntax highlight them for verifying them, generate markdown documentations for the plugins. The documentations included in this book (Function List and each plugin’s page like Attributes Plugin attributes) are generated with that. The documentation on each plugin functions comes from their docstrings in the code, please refer to how to write plugins section of the book for details on that.

The available options are shown below.

Usage: nadi [OPTIONS] [TASK_FILE]

Arguments:

[TASK_FILE] Tasks file to run; if `--stdin` is also provided this runs before stdin

Options:

-C, --completion <FUNC_TYPE> list all functions and exit for completions [possible values: node, network, env]

-c, --fncode <FUNCTION> print code for a function

-f, --fnhelp <FUNCTION> print help for a function

-g, --generate-doc <DOC_DIR> Generate markdown doc for all plugins and functions

-l, --list-functions list all functions and exit

-n, --network <NETWORK_FILE> network file to load before executing tasks

-p, --print-tasks print tasks before running

-P, --new-plugin <NEW_PLUGIN> Create the files for a new nadi_plugin

-N, --nadi-core <NADI_CORE> Path to the nadi_core library for the new nadi_plugin

-s, --show Show the tasks file, do not do anything

-S, --stdin Use stdin for the tasks; reads the whole stdin before execution

-r, --repl Open the REPL (interactive session) before exiting

-t, --task <TASK_STR> Run given string as task before running the file

-h, --help Print help

-V, --version Print version

NADI IDE

NADI Integrated Development Environment (IDE) is a Graphical User Interface (GUI) for the users to write/ run NADI Tasks.

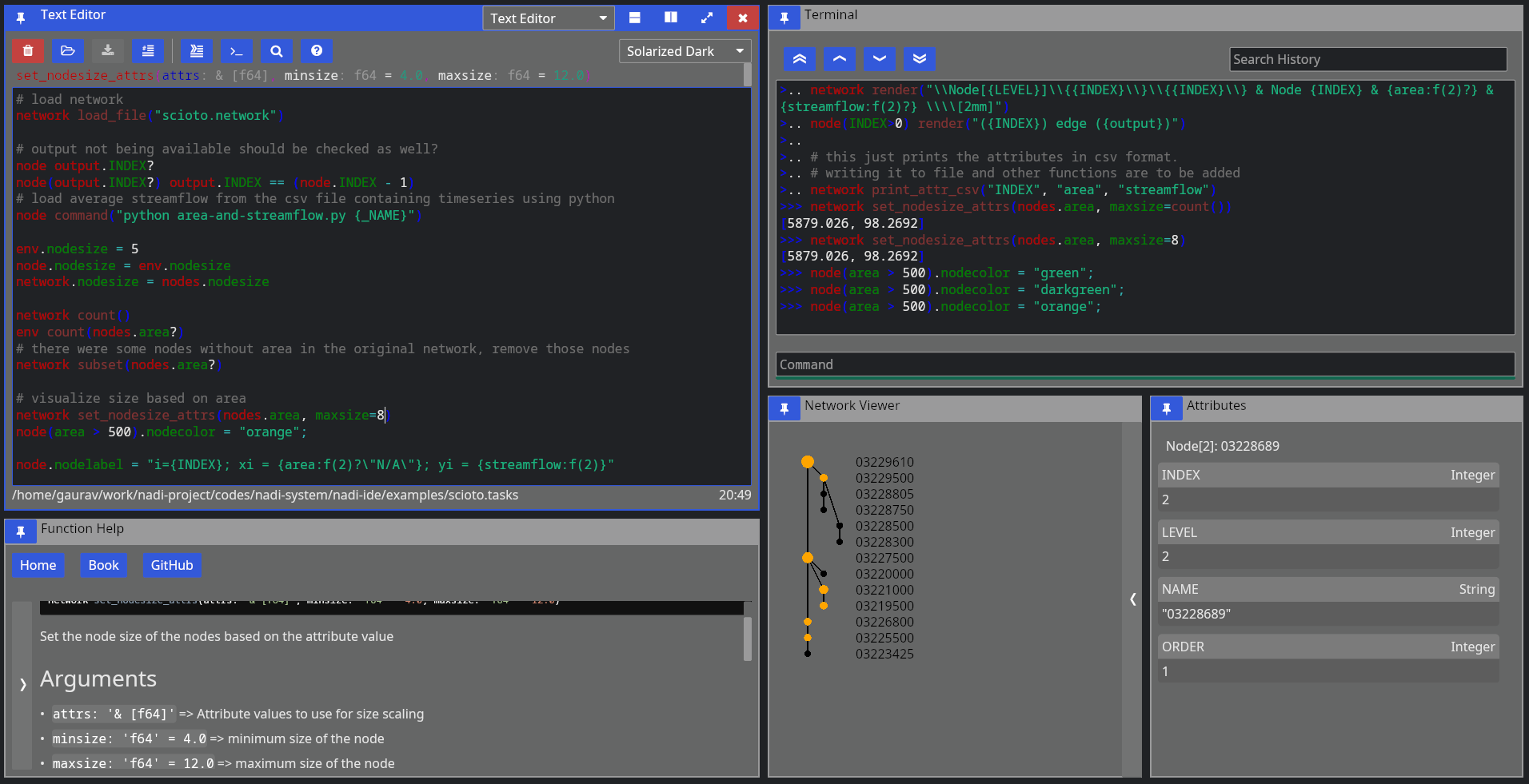

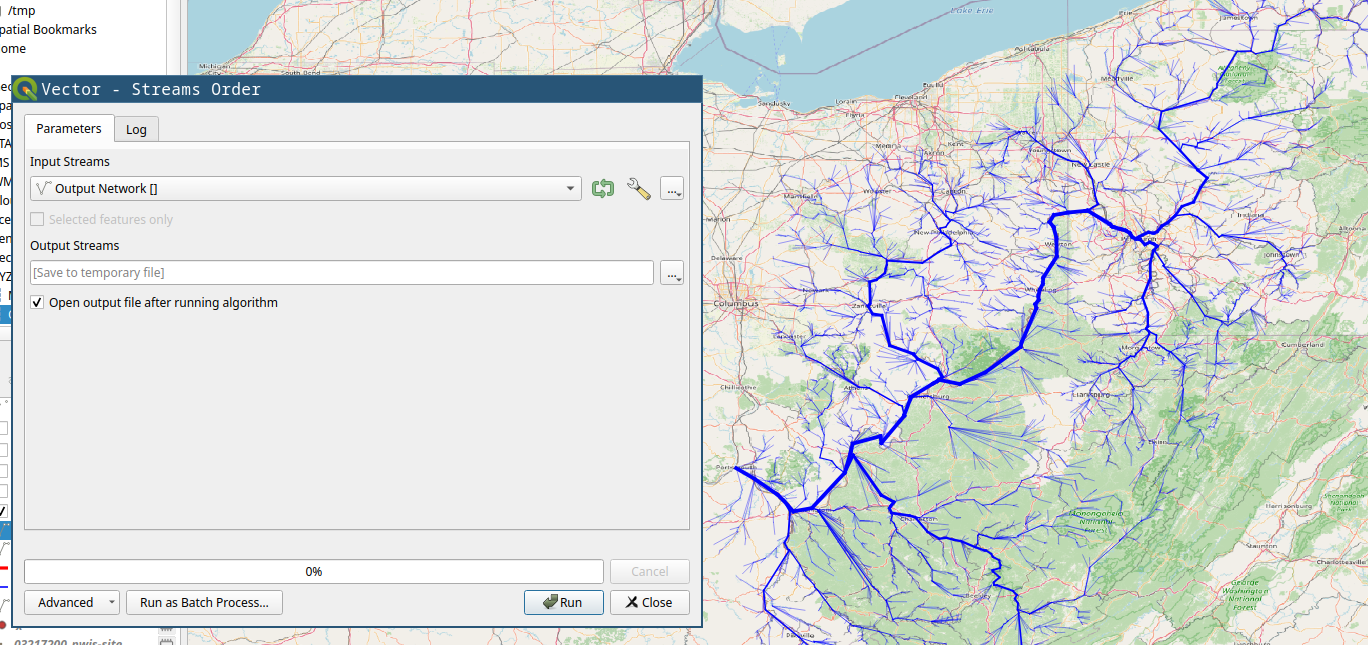

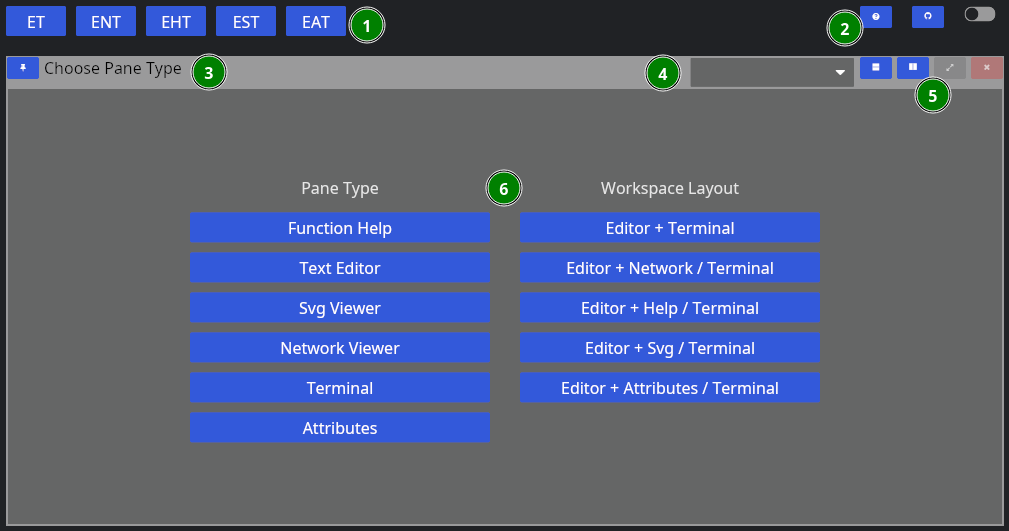

As seen in the image below, IDE consists of multiple components arranged in a tiling manner. You can drag them to move them around and build your own layout. When you start IDE it suggests you some layouts and what to open. You can use the buttons on the top right of each pane to:

- change pane type

- vertically split current pane

- horizontally split current pane

- fullscreen current page/ restore layout if it’s fullscreen

- close current pane

It has the following components:

Text Editor

Open text files, edit and save them.

It comes with syntax highlighting for most languages. And custom highlight for tasks and network files.

For Tasks file, it can also show you function signatures on top so you can write tasks easily, knowing what arguments the function needs and what the default values are.

While open inside IDE, it can also run the tasks by sending them to the terminal, or search help documentations on functions. Hover over the buttons on the top row to see which button does what, and the keyboard shortcut to use them as well.

Terminal

Terminal is there so you can run NADI in a interactive session. Read Eval Print Loop (REPL) of NADI here is meant mostly to be used inside the IDE to evaluate the tasks from editor, but you can open it independently as well.

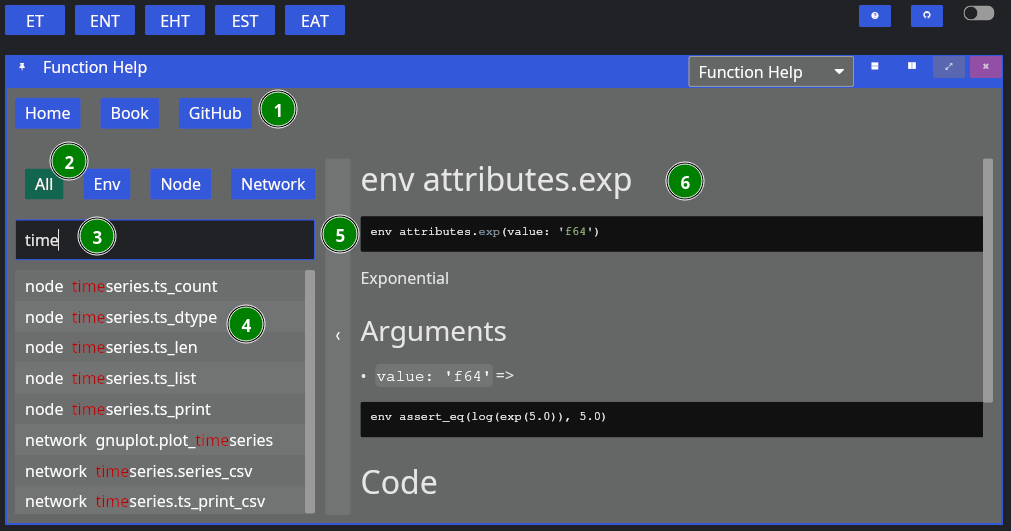

Function Help

This is a GUI with the list of all available plugin functions. You can expand the sidebar on left to search and browse functions. You can filter by type of function (node, network, env) with the buttons. When you click a function you can read its documentation on the right side.

Capabilities of the iced GUI libraries are limited right now, so you cannot select or copy text from the help. Please refer to the documentation online to do that. Or generate the documentation locally using nadi-cli tool.

Network Viewer

This is a pane where network is visualized, this is a very basic visualization to see the connections and is not optimized for drawing. Please avoid using this pane (making it visible) in case of large networks as it takes a lot of computation to draw this each frame.

Attribute Browser

When you click on a node on Network Viewer it will open/update showing the attributes of that node. There is no way to edit the attributes from here, which is intensional design as attributes should be assigned from tasks so that they are reproducible. For temporary assignments use the terminal.

SVG Viewer

This is a basic utility that can open a SVG file from disk and visualize it. You can click the refresh button to re-read the same file. This is intended for a quick way to check the SVG saved/exported from tasks. This is not a full fledge SVG renderer, so open them in image viewers or browsers to see how it looks.

Trivia

- Nadi means River in Nepali (and probably in many south asian languages).

- First prototype of NADI was Not Available Data Integration, as it was meant to be an algorithm to fill data gaps using network information, but it was modified to be more generic for many network related analysis.

Installation

NADI System is a suite of software packages each have different

installation methods. Some of the packages are uploaded to crates.io

(rust) and pypi (python). For others, you can either get the

compiled binaries from the Releases page of the

github repo [windows]. Or you can get the source code using git, and using

cargo build the packages [all OS].

| Program | Linux | Windows | Mac | Android (Termux) |

|---|---|---|---|---|

| NADI CLI | yes | yes | yes | yes |

| NADI IDE | yes | yes | yes | no |

| mdbook nadi | untested | yes | untested | yes |

nadi-py | yes | yes | yes | yes |

| QGIS Plugin | yes | yes | untested | no |

Untested means it should work in theory, but I have not tested it.

Packages

For nadi-py you can use pip:

pip install nadi-py

For nadi-cli you can use cargo:

cargo install nadi

Downloading Binaries

Goto the repo of each component and refer to the releases section for binaries of different versions.

To setup the nadi-systm to load the plugins you have to place them inside the directory included in the NADI_PLUGIN_DIRS environmental variable. Refer to your Operating System’s documentation on how to set environemental variables.

The binaries should be able to run directly without needing extra steps. If you get a security warnings because the binaries are not signed, you might have to ignore it.

For QGIS Plugin, you can install it from Plugins Wizard on QGIS if you turn on experiemental plugins. But the nadi-gis binary should be available from PATH (i.e. you can call it from terminal).

Building from Source

This is currently the preferred way of installing nadi-system (and nadi-gis for Linux and MacOS). Although it includes a bit more steps this makes sure the compiled program is compatible with your OS.

Prerequisites

The prerequisites for building from source are:

git[Optional]: to clone the repo, you can directly download zip from githubcargo: To build the binaries from source.gdal[Optional]: Only fornadi_gisbinary and plugin.

To install git refer to the instructions for your operating system from the official page.

For cargo follow the instructions to install rust toolsets for your operating system from the official page

Installing gdal can be little complicated for windows. For Linux, use your package manager to install gdal and/or gdal-dev package. Mac users can also install gdal using homebrew. For windows, follow the instructions from official website, after installation you might have to make some changes to environmental variables to let cargo know where your gdal binaries/header files are for the compilation to be successful. More details will be provided in the NADI GIS section.

If you use Linux or Mac (with homebrew), then the installation of prerequisites should be easy. But if you do not have the confidence to setup gdal for compiling nadi_gis use the binaries provided for them from the previous steps.

NADI System

It will build the binaries for nadi, nadi-ide, nadi-help, nadi-editor, etc. nadi is the command line interface to run nadi tasks, parse/validate syntax etc. While nadi-ide is the program to graphically develop nadi tasks and run them.

Assuming you have git and cargo,

git clone https://github.com/Nadi-System/nadi-system

cd nadi-system

cargo build --release

To run one of the binary from nadi system, use the command cargo run with binary name.

For example, the following will run the nadi-ide:

cargo run --release --bin nadi-ide

The compiled binaries will be saved in the target/release directory, you can copy them and distribute it. The binaries do not need any other files to run.

The plugins files if present in the system are automatically loaded from NADI_PLUGIN_DIRS environmental variable. Look into installing the plugin section below.

Note: all programs will compile and run in Windows, Linux, and MacOS, while only nadi-cli and mdbook-nadi will run in Android (tmux). nadi-ide and family need the GUI libraries that are not available for android (tmux) yet.

NADI GIS

NADI GIS uses gdal to read/write GIS files, so it needs to be installed. Please refer to gdal installation documentation for that.

Windows

First download compiled gdal from here:

- https://www.gisinternals.com/sdk.php Then download clang from here:

- https://github.com/llvm/llvm-project/releases

Extract it into a folder, and then set environmental variables to point to that:

GDAL_VERSION: Version of gdal e.g. ‘3.10.0’LIBCLANG_PATH: Path to thelibdirectory ofclangGDAL_HOME: Path to thegdalthat has the subdirectories likebin,lib, etc.

You can also follow the errors from the rust compilers as you compile to set the correct variables.

Finally you can get the source code and compile nadi-gis with the following command:

git clone https://github.com/Nadi-System/nadi-gis

cd nadi-gis

cargo build --release

This will generate the nadi-gis binary and gis.dll plugin in the target/release folder, they need to be run along side the gdal shared libraries (.dlls). Place the binaries in the same folder as the dlls from gdal and run it. To use the gis.dll plugin from nadi, nadi-ide, etc. same thing applies there, those binaries should be run with the gdal’s dlls to be able to load the gis plugin.

Linux and Mac

Assuming you have git, cargo, and gdal installed in your system you can build it like this:

git clone https://github.com/Nadi-System/nadi-gis

cd nadi-gis

cargo build --release --features bindgen

The bindgen feature will link the nadi-gis binary with the gdal from your system. So that you do not have to distribute gdal with the binary for your OS.

If you do not have gdal installed in your system, then you can still build the nadi-gis without the bindgen feature. This will still require gdal to be available and distributed with the binary.

cargo build --release

QGIS Plugin

The nadi-gis repo also contains the QGIS plugin that can be installed to run it through QGIS. The plugin will use the nadi-gis binary in your PATH if available. And it also contains the nadi plugin that can be loaded into the nadi system to import/export GIS files into/from the system.

You can download the zip file for plugin from releases page, and use the “Install from Zip” option on QGIS plugins tab. Or copy the nadi directory inside qgis to your python plugin directory for qgis.

Refer to the QGIS plugins page for more instructions. In future we are planning on publishing the plugin so that you can simply add it from QGIS without downloading from here.

NADI GIS Plugin

The NADI plugin on this repo provides the functions to import attributes, geometries from GIS files, and export them into GIS files.

NADI Plugins

Out of the two types of plugins, the executable plugins are just simple commands, they do not need to be installed along side NADI System, just make sure the executables that you are using from NADI System can be found in path. A simple way to verify that is to try to run that from terminal and see if it works.

The compiled plugins can be loaded by setting the NADI_PLUGIN_DIRS environmental variable. The environment variable should be the path to the folder containing the nadi plugins (in .dll, .so, or .dylib formats for windows, linux and mac). You can write your own plugins based on our examples and compile them.

Officially available plugins are in the nadi-plugins-rust directory.

Assuming you have git and cargo,

git clone https://github.com/Nadi-System/nadi-plugins-rust

cd nadi-gis

cargo build --release

The plugins will be inside the target/release directory. Copy them to the NADI_PLUGIN_DIRS directory for nadi to load them.

You can take any one of the plugins as an example to build your own, or following the plugin development instructions from the plugins chapter.

Plugins

There are two types of nadi plugins. Compiled plugins (shared libraries) are loaded dynamically from shared libraries, while executable plugins are called as shell commands. Refer to Plugins section of core concepts for more details.

Compiled Plugins

Compiled plugins are shared libraries (.so in linux, .dll in windows, and .dylib on macOS). They can be generated by compiling the nadi plugin in rust, or you can download the correct plugin for your OS and nadi_core version from the plugin repositories. It is recommended to only use plugins from trusted source.

To setup the nadi-systm to load the compiled plugins you have to place them inside the directory included in the NADI_PLUGIN_DIRS environmental variable. Refer to your Operating System’s documentation on how to set environemental variables.

The compiled plugins are loaded when NADI is starting up, there is no way to hot load or reload the plugins, so you need to reopen the nadi program itself (CLI, IDE, etc) if you want to load new/updated plugin functions.

Once the plugins are loaded, the functions are directly available from the nadi task system, they’ll act similar to the internal plugin functions.

Executable Plugins

Executable plugins are terminal commands, you set it up as you’d set any other terminal programs, by making sure the program is in PATH and can be executed from terminal. Linux and Mac do them mostly by default, while in Windows you might have to check the box saying something along the lines of “include this in path” during installation, or manually edit the PATH in “Environment Variables”.

For example, if you want to call python scripts, make sure you can run python --version in terminal and get a response.

You can also check it using the command function:

network command("python --version", echo=true)

network command("Rscript --version", echo=true)

network command("julia --version", echo=true)

Results:

$ python --version

Python 3.13.5

$ Rscript --version

Rscript (R) version 4.5.1 (2025-06-13)

$ julia --version

julia version 1.11.6

Here we can see, the commands that ran successfully and returned a version are valid.

To write scripts and run them from nadi refer to Executable Plugins section on Plugin Developer Guide.

NADI GIS

NADI GIS is available as a CLI tool and QGIS plugin, the CLI tool has the following functions:

Usage: nadi-gis [OPTIONS] <COMMAND>

Commands:

nid Download the National Inventory of Dams dataset

usgs Download data from USGS NHD+

layers Show list of layers in a GIS file

check Check the stream network to see outlet, branches, etc

order Order the streams, adds order attribute to each segment

network Find the network information from streams file between points

help Print this message or the help of the given subcommand(s)

Options:

-q, --quiet Don't print the stderr outputs

-h, --help Print help

The important functions are:

- Download NID and USGS NHD+ data,

- Check stream network for validity of DAG (Directed Acyclic Graph) required for NADI,

- Stream ordering for visual purposes,

- Network detection between points of interest using the stream network

You can use the help command for each one of the subcommand for more help.

For example, usgs subcommand’s help using nadi-gis help usgs gets us:

Download data from USGS NHD+

Usage: nadi-gis usgs [OPTIONS] --site-no <SITE_NO>

Options:

-s, --site-no <SITE_NO>

USGS Site number (separate by ',' for multiple)

-d, --data <DATA>

Type of data (u/d/t/b/n)

[upstream (u), downstream (d), tributaries (t), basin (b), nwis-site (n)]

[default: b]

-u, --url

Display the url and exit (no download)

-v, --verbose

Display the progress

-o, --output-dir <OUTPUT_DIR>

[default: .]

-h, --help

Print help (see a summary with '-h')

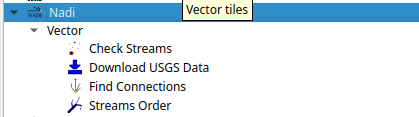

NADI QGIS

The QGIS plugin for nadi has a subset of the CLI functionality. It can be accessed from the Processing Toolbox.

You can run the tools from there and use the layers in QGIS as inputs. The QGIS plugin will first try to find nadi-gis binary on your PATH and use it, if not it’ll try to use the binary provided with the plugins. It is preferred to have nadi-gis available in PATH and running without errors.

Example

The examples here will be given using QGIS plugin, and using the CLI tool both. CLI tool is great for quickly running things, and doing things in batch, while QGIS plugin will be better on visualization and manual fixes using other GIS tools.

If you want a video demostration, there is a Demo Video on YouTube.

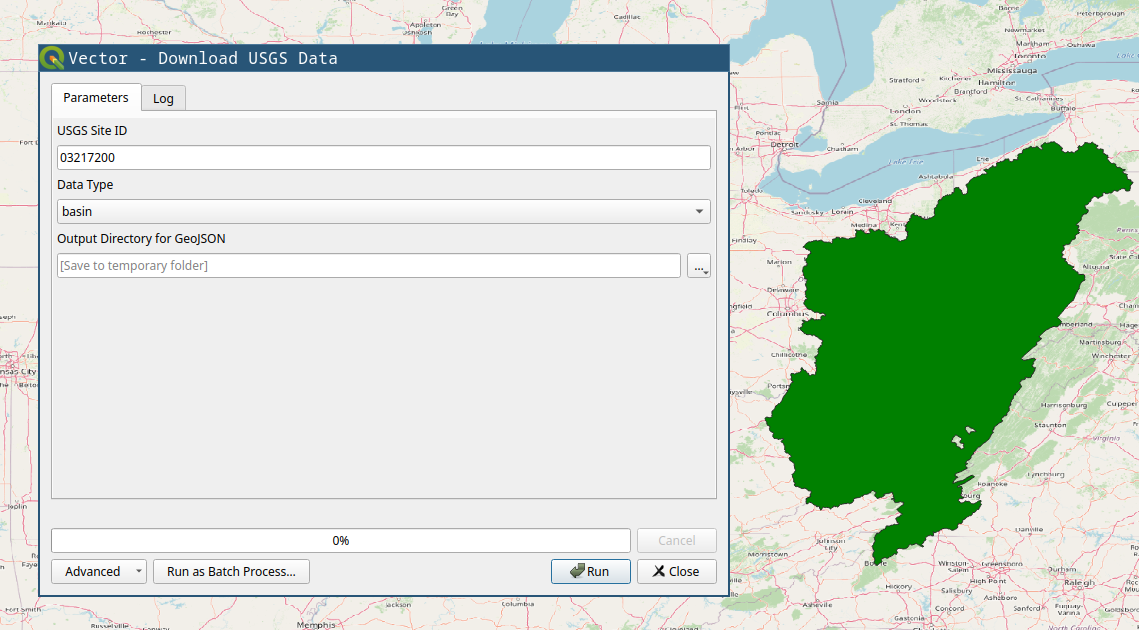

Using QGIS Plugin

First downloading the data is done through the Download USGS Data tool. As shown in the screenshot below, input the USGS site ID and the data type you want to download.

You will need, tributaries for the upstream tributaries for network, and nwis-site will download the USGS NWIS sites upstream of the location. We will use those two for the example. If you have national data from other sources, you can use the basin polygon to crop them.

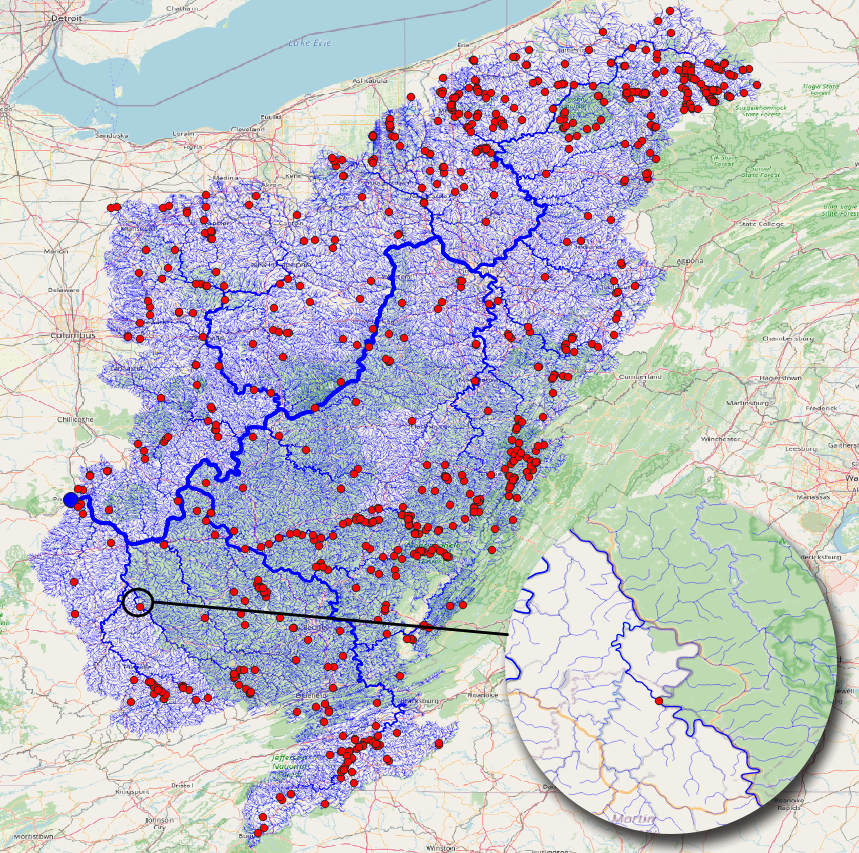

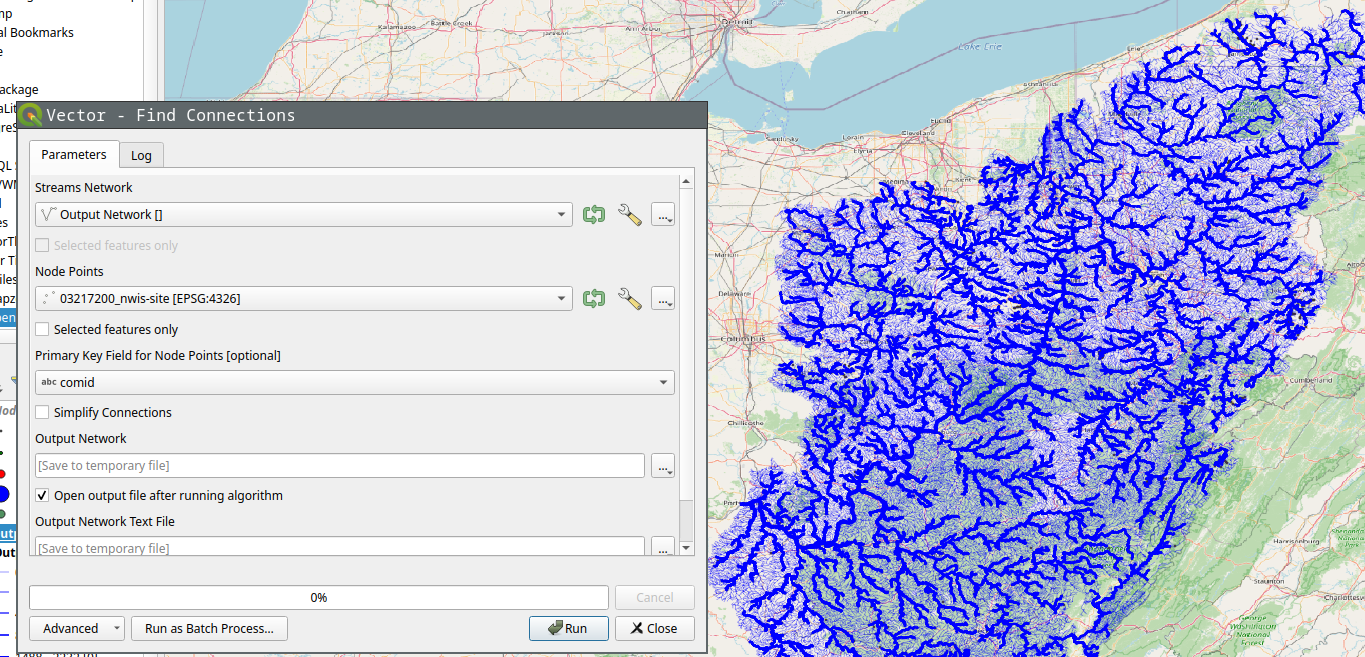

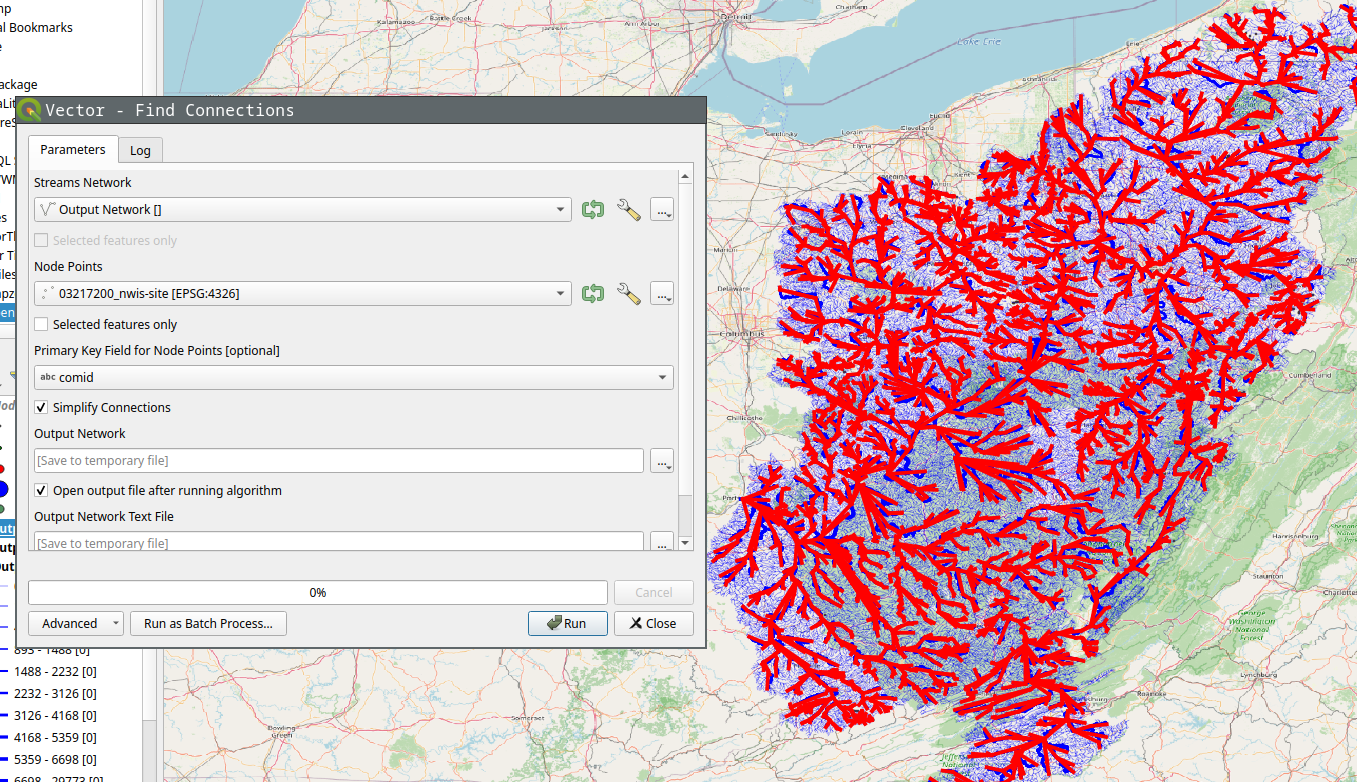

Stream Order tool is mostly for visual purposes. The figure below shows the results from stream order on right compared to the raw download on left.

After you have streams (tributaries), you can use the Check Streams tool to see if there are any errors. It will give all the nodes and their categories, you can filter them to see if it has branches, or if it has more than one outlet. The figure below shows the branches with red dot. If we zoom in we can see how the bifurcation on the stream is detected, and how stream order calculation is confused there.

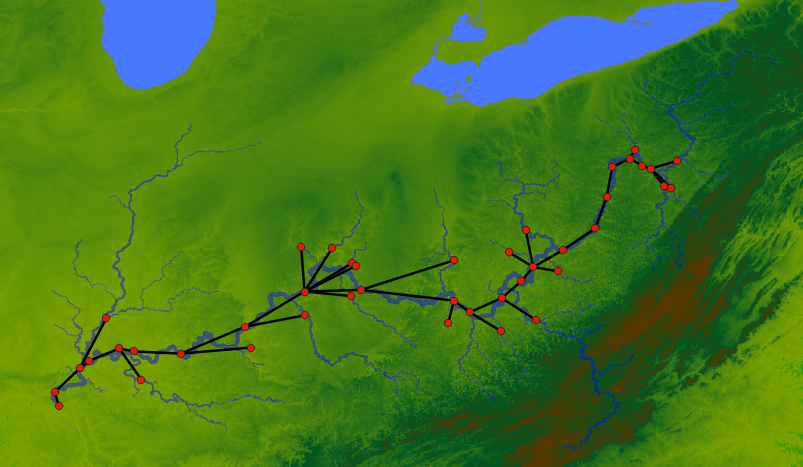

Find Connections tool will find the connection between the points using the stream network. The results below shows the tool being run on the NWIS points.

If we select simplify option, it’ll only save the start and end point of the connection instead of the whole stream.

Of course you can run Stream Order on the results to get a more aesthetically pleasing result.

Using CLI

An example of running nadi-gis using CLI can be done in the following steps:

Download data

We’ll download the streamlines and the NWIS Sites from USGS for station 03217200 (Ohio River at Portsmouth, OH).

nadi-gis usgs -s 03217200 -d n -d t -o output/

This will download two files:

output/03217200_nwis-site.json output/03217200_tributaries.json

Now we can use check command to see if there are any problems with the streams.

nadi-gis check output/03217200_tributaries.json

That gives us the following output:

Invalid Streams File: Branches (826)

* Outlet: 1

* Branch: 826

* Confluence: 30321

* Origin: 29591

We can generate a GIS file to locate the branches and see if those are significant. Refer to the help for check or use the QGIS plugin.

And to find the connections, we use network subcommand like this:

nadi-gis network -i output/03217200_nwis-site.json output/03217200_tributaries.json

Output:

Outlet: 3221 (-82.996916801, 38.727624498) -> None

3847 -> 3199

2656 -> 2644

399 -> 1212

2965 -> 3942

2817 -> 6236

5708 -> 4733

2631 -> 5741

201 -> 2101

2066 -> 2317

3770 -> 1045

... and so on

Since this is not as useful, we can use the flags in the network subcommand to use a different id, and save the results to a network file.

First we can use layers subcommand to see the available fields in the file:

nadi-gis layers output/03217200_nwis-site.json -a

which gives us:

03217200_nwis-site

- Fields:

+ "type" (String)

+ "source" (String)

+ "sourceName" (String)

+ "identifier" (String)

+ "name" (String)

+ "uri" (String)

+ "comid" (String)

+ "reachcode" (String)

+ "measure" (String)

+ "navigation" (String)

Using comid as the id for points, and saving the results:

nadi-gis network -i output/03217200_nwis-site.json output/03217200_tributaries.json -p comid -o output/03217200.network

The output/03217200.network file will have the connections like:

15410797 -> 15411587

6889212 -> 6890126

8980342 -> 10220188

19440469 -> 19442989

19390000 -> 19389366

6929652 -> 6929644

... and so on

Make sure you use a field with unique name, and valid identifier in NADI System.

Getting Started

This section walks you throuh the process of using NADI system through the CLI and IDE.

Network analysis is done through the Domain Specific Programming Language. This is the main feature of NADI.

You can run the DSL, called tasks through the CLI, or through the IDE. Refer to the Installation for how to install them.

Recommended way to develop/write nadi tasks is with IDE, while CLI can be used to automate/batch run it once the tasks are finalized.

Command Line Interface (CLI)

NADI CLI is available as nadi command when you have it installed. You can run it in interactive mode in a Read Eval Print Loop (REPL), or provide a file to run.

So assuming you have the following contents in sample.tasks file:

network load_str("a -> b")

node array(LEVEL, ORDER)

You run it with:

nadi sample.tasks

You should get the following output:

{

b = [0, 2],

a = [0, 1]

}

If you run nadi with --repl or -r flag, then it’ll open a REPL. If you have a tasks file provided like before, it’ll run the tasks in the file before entering a REPL, otherwise it’ll start from empty context.

For more details on other use of nadi command. Refer to the output of nadi --help. It has options for,

- Evalute some tasks before running the file/repl for setting context,

- Print/Generate help for functions,

- Inspect code for functions,

- Autocomplete nadi functions,

- Generate a template for nadi plugin code, and more.

Integrated Development Environment (IDE)

NADI IDE contains multiple components to make it easier to write, edit, and run tasks, as well as visualize the network and browse the function documentation.

As you can see, the main UI is divided into multiple panes, which are independent components that you can resize, arrange the way you want.

First, when you start NADI IDE, you come up with this view:

- Different layout options

- Global options

- Pane Title Bar

- Dropdown to change the Pane Type

- Pane options (horizontal/vertical split, fullscreen, close)

- Pane Contents

The layout of each pane are similar, while each one will have their own contents. For example, “Function Help” contains the help for the plugin functions. We will go over each one in a separate chapter.

You can choose a singular pane type here, or chose a combination of panes that feels more useful. Some panes like terminal/attribute views also spawn automatically (if not already in view) when you try to run tasks, or click nodes.

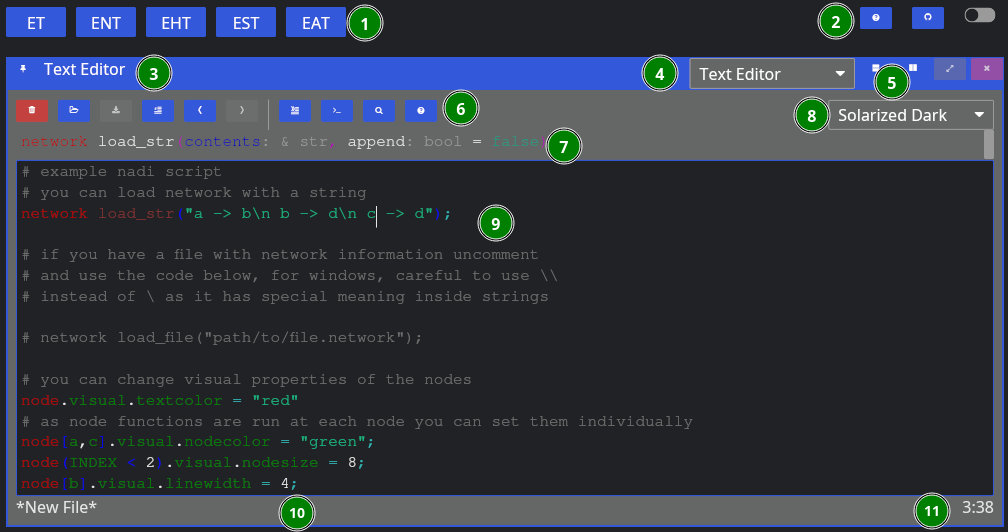

Now, let’s choose “Text Editor” for now, then we’re greeted with this view:

Now, aside from the global components, we have:

- Editor Tools,

- Function Signature: Only shows when cursor is on a function,

- Editor Theme: Only works for non-NADI formats like Python, C, Rust, R, etc.

- Tasks File Contents: File contents with syntax highlighting,

- Current File Path: Where the file is opened from and will be saved, and

- Line/Column of Cursor.

As for the editor tools: you can hover over them to know their names, and shortcuts. We’ll explain them shortly below in the left to right order,

| Button | Key | Function |

|---|---|---|

| New File | Ctrl + n | Remove the contents, and the filepath for new file |

| Open | Ctrl + o | Browse and open a new file |

| Save | Ctrl + s | Save the file, browse for new file if path is not given |

| Toggle Comment | Alt + ; | Comment or uncomment the current selection |

| Undo | Ctrl + z | Undo the last edit (edits are saved periodically) |

| Redo | Ctrl + y | Redo the last undo (redo vanishes if you edit after undo) |

| Run Line/Selection | Ctrl + Enter | Run the current line, or the selection in terminal |

| Run Buffer | Ctrl + Shift + Enter | Run the whole buffer (file contents) in terminal |

| Search | Search the selection in function help | |

| Help | Visit the function help for current function |

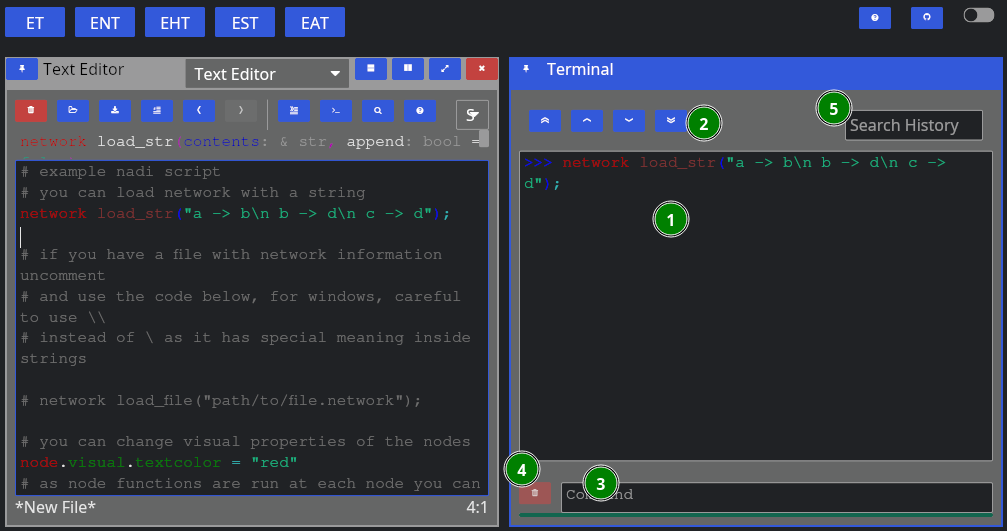

When yo run a line using “Run Line/Selection” it should spawn a terminal pane on the right side

Here we have:

- Terminal Contents: tasks that were run, and their output,

- Terminal Navigation: Buttons to goto top/bottom or page up/down,

- Command Entry: You can directly enter commands here instead of from editor (they won’t be saved),

- Clear: Clear the current command entry,

- History: Search here for previous commands, clicking them will copy it to the Command Entry

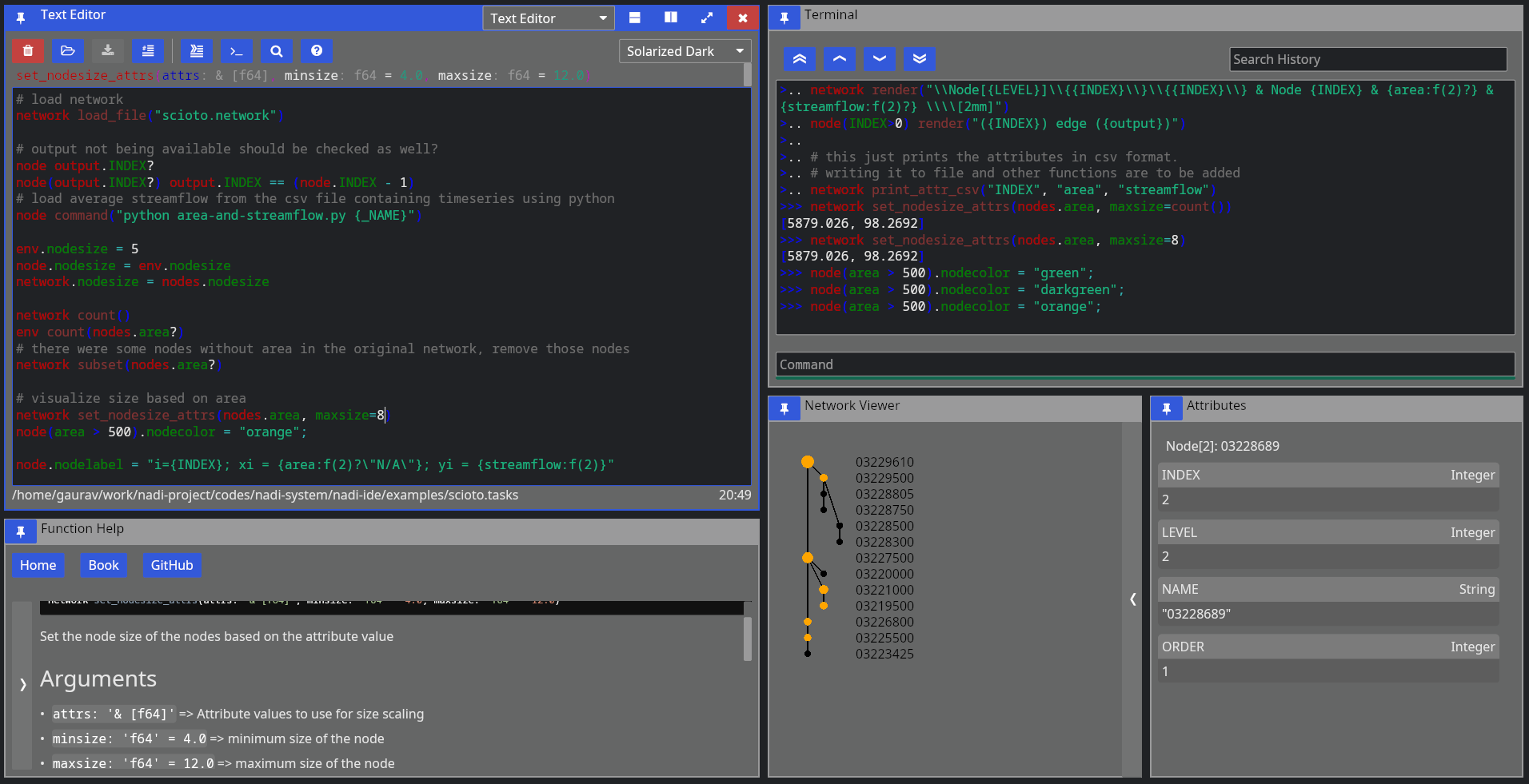

Upto here will be enough to run tasks. But the advantage of the IDE with a Graphical User Interface (GUI) comes in the form of network visualization. If you open the Network Viewer pane, either through the dropdown to change one of the pane. Or split the panes and open Network Viewer there, you can see the network visualized.

The figure below shows the network viewer, and the attribute viewer (it spawns when you click on a node in the network) on the bottom right.

You can click on the “<” button on the right side of the network viewer to expand the sidebar that has details on how to change the visual attributes of the nodes. You can run the examples in the code for testing them out as well.

Extra components of the IDE includes the Function Help that you can use to browse the plugin functions’ documentation, and SVG viewer that you can use to open SVG files. The SVG viewer is very primitive and only meant to be a quick check/update while you’re fine tuning an exported SVG file, use dedicated softwares and web browsers for actual viewing.

The function help looks like below:

The figure contains,

- Buttons for Reset, and links to NADI Book and Github,

- Buttons to filter search by function type,

- Text Entry to search the function,

- List of plugin functions,

- Bar to collapse/expand the function search sidebar, and

- Plugin function documentation content.

Currently you cannot copy the code or any contents from the documentation, it is a limitation of the GUI library that might be fixed in the future. If you want to copy the text, refer to the web version of the function help (in NADI Book). Or use the help [type] <function> task. For example:

help node render

Results:

node render (template: '& Template', safe: 'bool' = false)

Render the template based on the node attributes

# Arguments

- `template: & Template` String template to render

- `safe: bool` [def = false] if render fails keep it as it is instead of exiting

For more details on the template system. Refer to the String

Template section of the NADI book.

```task

network load_str("a -> b")

node.x = 13

node assert_eq(render("abc {x}"), "abc 13")

```

You can copy the example code from the output in the terminal.

That should be enough for you to be able to use the GUI, in the next section we’ll talk about the core concepts in NADI system that you need to understand, and then we’ll show some examples you can run using what you learned in this chapter.

Core Concepts

This section contains a brief explanation of core concepts.

The main concepts that you need to know are:

-

Attributes are values, it can be float, integer, boolean, strings, or list of attributes, or a map of attributes (key=value),

-

Nodes are points in the network, they can have attributes, input nodes and an output node,

-

Network is a collection of nodes, network can also have attributes, Network used in the NADI System can have only one outlet, so a ‘ROOT’ node is added if there are multiple outlet. And loading a network that is not a directed tree is undefined behaviour.

-

Expression is something that can be evaluated or executed, it consists of literal values (attributes), variables (node, network, env variables that could hold attributes), function calls, or a mathmatical or logical operation.

-

Functions in nadi are of 3 types, env functions are normal functions that take values and run, network functions take values and run on the network, while node functions run at each node (they also provide a way to subset which nodes to run it on).

-

Task is an execution body of the task system. It can be of env, network or node type. It can be conditional (If-Else) or loop (While) consisting of more tasks inside it. Task can assign values to the env/network/node attributes, or call mutable functions on the top level.

-

String Template: Some functions take string inputs that are interpreted dynamically to represent different strings based on variables.

-

Plugins provide the functions used by the nadi task system. There are internal plugins and external plugins. Internal plugins comes with the installation, while external plugins are loaded from dynamic libraries.

Keywords

| Keyword | Description |

|---|---|

| node | the node task type, function or variable |

| network/net | the network task type, function or variable |

| env | the environment task type, function or variable |

| exit | exit the program |

| end | end the execution of tasks without exiting |

| help | display help for functions |

| inputs | get node variables or function output for input nodes of a node |

| output | get node variable or function output for output node of a node |

| nodes | get node variable or function output for all nodes in the network |

| if | if statement for conditional task/expression |

| else | else statement for conditional task/expression |

| while | while statement for loop task |

| in | binary operator to check if something is in another (list/string) |

| match | binary operator to check patterns on string (regex) |

And here are some keywords reserved for future:

| Keyword | Description |

|---|---|

| function/func | user defined functions |

| map | map values in an array/attrmap to a function |

| attrs | attributes of the env/node/network |

| loop | loop task |

| for | for loop task for looping through array/attrmap |

Symbols

Some special symbols and their functions are listed below:

.dot accessor for variables, e.g.node.var,node.var.another, etc.,?variable check (only used after a variable) e.g.node.var?evaluates to atrueorfalse,(),{},[]brackets for functions/expressions, attrmaps and arrays,;suppress current task output (only used at the end of a task),+,-,*,/, and//are mathematical operators,&,|, and!are logical operators,>,<,>=,<=, and==are comparision operators,->path operator (only used in node propagation, or network),=is assignment operator,#starts a comment,

There might be more functions of each symbol depending on the context.

Continue with the chapters for details on each concept. Or skip ahead to Learn by Examples if you want to jump into the examples.

Task

Task is an execution body in the task system. There are different types of tasks, specially environment, network and node type tasks, and there can be conditional tasks that only execute based on a condition or loops.

Some examples of different tasks are given below to show a general overview, but the concepts inside the tasks system will be introduced as we progress through the chapters,

Environment tasks that can evaluate expressions, assign variables, or call functions:

env 1 + 2 * 8

env render("my name is {_name}", name="John")

env.x = 12 > 2;

env.x

Results:

17

"my name is John"

true

network task loading a network, and node task getting node attributes:

network load_str("a->b\nb->c")

node.NAME

Results:

{

c = "c",

b = "b",

a = "a"

}

Conditional and Loop task

if ( !val? | (val > 5) ) {

# if val is not defined or greater than 5, set it to 0

env.val = 0

}

while (val < 5) {

env.val = env.val + 1

}

Results:

0 -> 1

1 -> 2

2 -> 3

3 -> 4

4 -> 5

Tasks system acts like a scripting language for nadi system. A Task consists of getting/evaluating/setting attributes in environment, network or nodes. The value that can be evaluated are expressions that consists of literal values, variables, or function calls that can either be a environment, node or a network function. Functions are unique based on their names, and can have default values if users do not pass all arguments.

The code examples throughout this book, that are being used to generate network diagrams, tables, etc are run using the task system.

Here is an example contents of a more complex task file, do not concern with what each task does, we will go through them in other chapters.

# sample .tasks file which is like a script with functions

node<inputsfirst> print_attrs("uniqueID")

node show_node()

network save_graphviz("/tmp/test.gv")

node<inputsfirst>.cum_val = node.val + sum(inputs.cum_val);

node[WV04113,WV04112,WV04112] print_attr_toml("testattr2")

node render("{NAME} {uniqueID} {_Dam_Height_(Ft)?}")

node list_attr("; ")

# some functions can take variable number of inputs

network calc_attr_errors(

"Dam_Height_(Ft)",

"Hydraulic_Height_(Ft)",

"rmse", "nse", "abserr"

)

node sum_safe("Latitude")

node<inputsfirst> render("Hi {SUM_ATTR}")

# multiple line for function arguments

network save_table(

"test.table",

"/tmp/test.tex",

true,

radius=0.2,

start = 2012-19-20,

end = 2012-19-23 12:04

)

node.testattr = 2

node set_attrs_render(testattr2 = "{testattr:calc(+2)}")

node[WV04112] render("{testattr} {testattr2}")

# here we use a complicated template that can do basic logic handling

node set_attrs_render(

testattr2 = "=(if (and (st+has 'Latitude) (> (st+num 'Latitude) 39)) 'true 'false)"

)

# same thing can be done if you need more flexibility in variable names

node load_toml_string(

"testattr2 = =(if (and (st+has 'Latitude) (> (st+num 'Latitude) 39)) 'true 'false)"

)

# selecting a list of nodes to run a function

node[

# comment here?

WV04113,

WV04112

] print_attr_toml("testattr2")

# selecting a path

node[WV04112 -> WV04113] render("=(> 2 3)")

Attributes

Attributes are TOML like values. They can be one of the following types:

| Type Name | Rust Type | Description |

|---|---|---|

| Bool | bool | Boolean values (true or false) |

| String | RString | Quoted String Values |

| Integer | i64 | Integer values (numbers) |

| Float | f64 | Float values (numbers with decimals) |

| Date | Date | Date (yyyy-mm-dd formatted) |

| Time | Time | Time (HH:MM, HH:MM:SS formatted) |

| DateTime | DateTime | Date and Time separed by or T |

| Array | RVec<Attribute> | List of any attribute values |

| Table/AttrMap | AttrMap | Key Value pairs of any attribute values |

You can write attributes directly into the task system to assign them, use them in functions. You can also load attributes from a file into the env/node/network.

If you want to assign a attribute inside the task system, you can do it like this:

env.river = "Ohio River"

env.river

Results:

"Ohio River"

Example Attribute File that can be loaded:

river = "Ohio River"

outlet = "Smithland Lock and Dam"

outlet_is_gage = true

outlet_site_no = ""

streamflow_start = 1930-06-07

mean_streamflow = 123456.0

obs_7q10 = 19405.3

nat_7q10 = 12335.9

num_dams_gages = 2348

Here loading the files we can see only ohio has the attributes loaded

network load_file("./data/mississippi.net")

node[ohio] load_attrs("./data/attrs/{_NAME}.toml")

node.outlet

Results:

{

lower-mississippi = <None>,

upper-mississippi = <None>,

missouri = <None>,

arkansas = <None>,

red = <None>,

ohio = "Smithland Lock and Dam",

tenessee = <None>

}

With plugins, you can load attributes from different file types.

Node

A Node is a point in network. A Node can have multiple input nodes and only one output node. And a Node can also have multiple attributes identifiable with their unique name, along with timeseries values also identifiable with their names.

If you understand graph theory, then node in nadi network is the same as a node in a graph.

Nodes in NADI Network are identified by their name, that is loaded from the network file. Node names are string values, even if they are integer or float, they are read and internally stored as strings. If the node name contains characters outside of alphanumeric and underscore (_), it has to be quoted.

i.e. valid names like 123 or node_1 can appear unquoted or quoted, but names like node-123 needs to be quoted: "node-123".

network load_str("

123 -> node_1

node_1 -> \"node-123\"

")

node.NAME

Results:

{

node-123 = "node-123",

node_1 = "node_1",

123 = "123"

}

If you do not quote the name, you’ll get an error:

network load_str("123 -> node-1")

node.NAME

*Error*:

Error in function load_str: Error: Parse Error at Line 1 Column 8

123 -> node-1

^ Incomplete Path; expected node here

Network

A Network is a collection of nodes. The network can also have attributes associated with it. The connection information is stored within the nodes itself. But Network will have nodes ordered based on their connection information. So that when you loop from node from first to last, you will always find output node before its input nodes.

A condition a nadi network is that it can only be a directed graph with tree structure.

Example Network file:

# network consists of edges where input node goes to output node

# each line is of the format: input -> output

tenessee -> ohio

# if your node name has characters outside of a-zA-Z_, you need to

# quote them as strings

ohio -> "lower-mississippi"

"upper-mississippi" -> "lower-mississippi"

missouri -> "lower-mississippi"

arkansas -> "lower-mississippi"

red -> "lower-mississippi"

The given network can be loaded and visualized using svg_save function.

network load_file("./data/mississippi.net")

network command("mkdir -p output")

network svg_save(

"./output/network-mississippi.svg",

label="[{INDEX}] {_NAME:repl(-, ):case(title)}",

bgcolor="gray"

)

Results:

You can assign different graphical properties through node properties.

network load_file("./data/mississippi.net")

node[red].visual.nodecolor = "red";

node[ohio].visual.linecolor = "blue";

node[ohio].visual.linewidth = 3;

node["upper-mississippi", red].visual.nodesize = 8;

node[red].visual.nodeshape = "triangle";

node["upper-mississippi"].visual.nodeshape = "ellipse:0.5";

network svg_save(

"./output/network-mississippi-colors.svg",

label="[{INDEX}] {_NAME:repl(-, ):case(title)}",

bgcolor="gray"

)

Results:

Expression

Expressions are airthmetic or logical operations. They can appear inside the conditional statements, or as input to a task, or nested in other expression or function calls.

Expressions are defined into the following categories:

Literal Values

env [1, true, "no maybe"]

Results:

[1, true, "no maybe"]

Variable

env.value = [1, true, "no maybe"];

env.value

Results:

[1, true, "no maybe"]

Variables also have a “check” mode, where it returns true if variable exists, false if it does not.

env.value = [1, true, "no maybe"];

env value?

env other_var?

Results:

true

false

You can also use use varible from node, or network in other context. For example:

env.value = [1, true, "no maybe"];

network echo(json(env.value))

Results:

[1, true, "no maybe"]

Special variable types like nodes, inputs, output are available besides env, network and node based on what type of task the expression is on.

You will learn more about this on Cross Context Functions and Variables chapter.

Unary Operator

env !true

env - 12.0

Results:

false

-12

Binary Operator

env (12 > 34) & true

env "x" in "xyz"

env 12 in [123, true]

env "my name is" match "^my.*"

Results:

false

true

false

true

If Else

env if(!true) {"if true"} else {"if false"}

Results:

"if false"

Function

env.value = [1, true, "no maybe"];

env get(value, 2)

Results:

"no maybe"

Out of all expressions, only the function is not garanteed to return a value. If you are using a function expression and expect a value and it does not return it, it’ll be a runtime error.

env echo("Hello world!") + 12

*Error*:

Hello world!

Function echo did not return a value

Special function types like nodes, inputs, output are available besides env, network and node based on what type of task the expression is on.

You will learn more about this on Cross Context Functions and Variables chapter.

String Template

String templates are strings with dynamic components that can be rendered for each node based on the node attributes.

A simple template can be like below:

Hi, my name is {name}, my address is {address?"N/A"}.

I wrote this document on {%A}, exact date: {%Y-%m-%d}.

Results (with: name=John; address=123 Road, USA):

Hi, my name is John, my address is 123 Road, USA.

I wrote this document on Wednesday, exact date: 2025-08-06.

With more complicated templates, we would be able to generate documents with text and images based on the node attributes as well.

For example the following template can be used to generate a table.

| Name | Index |

|------------------|---------|

<!-- ---8<--- -->

| {_NAME:case(up)} | {INDEX} |

<!-- ---8<--- -->

network load_file("./data/mississippi.net");

network echo(render_template("./data/example.template"))

Results:

| Name | Index |

|---|---|

| LOWER-MISSISSIPPI | 0 |

| UPPER-MISSISSIPPI | 1 |

| MISSOURI | 2 |

| ARKANSAS | 3 |

| RED | 4 |

| OHIO | 5 |

| TENESSEE | 6 |

Of course, there are better ways to generate table than this, but this shows how flexible the template system is.

Node Function

Node function runs on each node. It takes arguments and keyword arguments.

For example following node function takes multiple attribute names and prints them. The signature of the node function is print_attrs(*args).

network load_file("./data/mississippi.net")

node print_attrs("INDEX", name=false)

Results:

INDEX = 0

INDEX = 1

INDEX = 2

INDEX = 3

INDEX = 4

INDEX = 5

INDEX = 6

Only the NAME is printed as they do not have any other attributes.

Node Function Propagation

Propagation of the node functions refer to the order and selection of nodes to run the function on.

By default node function is run at each node. But that might not be the intended use of the function, for example you might want to:

- run the function only on nodes that satisfy a condition,

- run the funciton only on a group of nodes,

Or you might want to change the order of the node function execution. Like running input nodes before the output node, if your function/analysis needs that.

These things are done with 3 syntax in the node function:

Order

You can run functions in different orders:

sequential/seq=> based on node indexinverse/inv=> inverse based on node indexinputsfirst/inp=> input nodes before outputoutputfirst/out=> output node before inputs

network load_str("a -> b\n b ->d \n c -> d \n d -> e")

node<seq> array(INDEX, ORDER)

node<inv> array(INDEX, ORDER)

Results:

{

e = [0, 5],

d = [1, 4],

c = [2, 1],

b = [3, 2],

a = [4, 1]

}

{

a = [4, 1],

b = [3, 2],

c = [2, 1],

d = [1, 4],

e = [0, 5]

}

Currently, inp and inv are equivalent, while seq and out are also equivalent. But when the parallization is added in the future versions, they will be implemented differently. So for backward compatibility, if you function needs to be run in a certain way, always use that one.

Here an example showing how to calculate the order of the node.

network load_str("a -> b\n b ->d \n c -> d \n d -> e")

node<inp>.val = sum(inputs.val) + 1;

node array(val, ORDER)

Results:

{

e = [5, 5],

d = [4, 4],

c = [1, 1],

b = [2, 2],

a = [1, 1]

}

If you do not use the inputsfirst propagation here, you get an error because the val attribute doesn’t exist in inputs, and if you did have that variable already, it would be old data instead of the recent results from your expression.

network load_str("a -> b\n b ->d \n c -> d \n d -> e")

node.val = sum(inputs.val) + 1;

node array(val, ORDER)

*Error*:

Attribute not found

NOTE: I need to work on better error messaging. It is in TODO list for the next major release.

Node List/Path

You can selectively run only a few nodes based on a list, or a path.

Given this network:

List of Nodes

List of node contains a separated list of node names or quoted string if the name is not valid identifier/number inside [].

network load_file("./data/mississippi.net")

node[tenessee,"lower-mississippi"] print_attrs("NAME")

Results:

NAME = "lower-mississippi"

NAME = "tenessee"

Path of Nodes

Path of node has the same syntax as a path used in the network file. It has starting node and end node. Instead of it representing a single edge like in network file, it represents all the nodes that are between those two (inclusive).

network load_file("./data/mississippi.net")

node[tenessee -> "lower-mississippi"] print_attrs("NAME")

Results:

NAME = "tenessee"

NAME = "ohio"

NAME = "lower-mississippi"

As we can see in the diagram, the path from tenessee to lower mississippi includes the ohio node.

Logical Condition

Logical condition is used by putting an expression that evaluates to a boolean value inside the ().

network load_file("./data/mississippi.net")

node("mississippi" in NAME) print_attrs("NAME")

Results:

NAME = "lower-mississippi"

NAME = "upper-mississippi"

Combination

You can combine the three different types of propagations in a single task using the syntax node<...>[...](...), they must come in that sequence as the order is decided first, then the list/path is taken, and finally the condition is evaluated to boolean before selecting the nodes.

For example:

network load_file("./data/mississippi.net")

node[tenessee -> "lower-mississippi"]("mississippi" in NAME) INDEX

node<inv>[tenessee -> "lower-mississippi"] INDEX

node<inv>[tenessee -> "lower-mississippi"](ORDER > 1) INDEX

Results:

{

lower-mississippi = 0

}

{

lower-mississippi = 0,

ohio = 5,

tenessee = 6

}

{

lower-mississippi = 0,

ohio = 5

}

Network Function

Network function runs on the network as a whole. It takes arguments and keyword arguments. Few network functions we have been using throughout the examples are load_file, load_str and svg_save:

network load_file("./data/mississippi.net")

network command("mkdir -p output")

network svg_save(

"./output/network-mississippi-sdf.svg",

label="[{INDEX}] {_NAME:repl(-, ):case(title)}",

bgcolor="gray"

)

Results:

For example following network function takes file path as input to save the network in graphviz format:

save_graphviz(

outfile [PathBuf],

name [String] = "network",

global_attrs [String] = "",

node_attr [Option < Template >],

edge_attr [Option < Template >]

)

Note that, if the arguments have default values, or are optional, then you do not need to provide them.

For example, you can simply call the above function like this.

network load_file("./data/mississippi.net")

network save_graphviz("./output/test.gv")

network clip()

# the path link are relative to /src

network echo("./output/test.gv")

Results:

digraph network {

"upper-mississippi" -> "lower-mississippi"

"missouri" -> "lower-mississippi"

"arkansas" -> "lower-mississippi"

"red" -> "lower-mississippi"

"ohio" -> "lower-mississippi"

"tenessee" -> "ohio"

}

With extra commands you can also convert it into an image

network load_file("./data/mississippi.net")

network save_graphviz("./output/test.gv")

network command("dot -Tsvg ./output/test.gv -o ./output/test.svg")

network clip()

# the link path needs to be relative to this file

network echo("../output/test.svg")

Results:

Cross Context Functions and Variables

You can access variable and call functions based on their default context (e.g. node variable/function in a node task). Additionally, you can also access the variables or call functions in select few other context.

By default, if a function is not available, node/network task calls the environment function of the same name.

For example, here the sum and array functions are environment functions, while the count is a network function. When you use NADI IDE, it’ll show you which function is actually being called at the top of the editor.

network load_str("a->b")

network sum(array(count(), 1))

Results:

3

Besides this, you can manually call cross context variable/functions in the following ways:

Env and Network Variables/Functions

You can use env and network variables anywhere in the task system with the dot syntax.

network load_str("a->b")

env.var = 12;

network.sth = true;

env render("this is {x}", x = network.sth)

network str(env.var)

node array(network.sth, env.var, node.NAME)

Results:

"this is true"

"12"

{

b = [true, 12, "b"],

a = [true, 12, "a"]

}

Similary, env and network functions can be called anywhere. These functions cannot be mutable functions (change network internally).

Taking the previous example, if we use env function count, we get an error as the function arguments are different.

network load_str("a->b")

node network.count()

network sum(array(env.count(), 1))

*Error*:

{

b = 2,

a = 2

}

Error in function count: Argument 1 (vars [& [bool]]) is required

Node Variables/Functions

You can use node, inputs, output and nodes keywords to access node variables and functions from different contexts. nodes is valid in all tasks, while the other 3 are only valid in a node task and refer to the current node, input nodes and output node respectively.

network load_file("./data/mississippi.net")

env count(nodes._)

node inputs.NAME

Results:

7

{

lower-mississippi = ["ohio", "upper-mississippi", "missouri", "arkansas", "red"],

upper-mississippi = [],

missouri = [],

arkansas = [],

red = [],

ohio = ["tenessee"],

tenessee = []

}

You can call node functions not just for the node in the context, but also for input nodes, and output node:

Please note that the root node (outlet) of the network doesn’t have output node, so we need to skip that, which can be done through the output._? which is checking for the dummy variable _ in output, which is true if the node has an output.

network load_file("./data/mississippi.net")

node[tenessee -> "lower-mississippi"] inputs.render("{_NAME}")

node[tenessee -> "lower-mississippi"](output._?) output.render("{_NAME}")

Results:

{

tenessee = [],

ohio = ["tenessee"],

lower-mississippi = ["ohio", "upper-mississippi", "missouri", "arkansas", "red"]

}

{

tenessee = "ohio",

ohio = "lower-mississippi"

}

You can also use nodes keyword to call the function on each node, it can be used anywhere, but is useful for env and network tasks.

network load_file("./data/mississippi.net")

env nodes.render("Node [{INDEX}] {_NAME}")

Results:

["Node [0] lower-mississippi", "Node [1] upper-mississippi", "Node [2] missouri", "Node [3] arkansas", "Node [4] red", "Node [5] ohio", "Node [6] tenessee"]

Plugins

Plugins are the main source of functions in the task system. There are two types of plugins,

- internal plugins: come with your nadi distribution, and

- external plugins: you can install them using shared libraries.

The functions available from the internal plugins are available in any format. You can access the functions with their name, or with plugin.name syntax.

External plugins are loaded from the directory in NADI_PLUGIN_DIRS environmental variable. If the shared library in that directory does not match the specification of NADI plugin, is compiled using different version, or compiled with different internal data structures, it will print warning and skip that file.

Security

Plugins can run arbitrary code, so users are expected to be careful while loading plugins by making sure it is not malicious.

Rust plugins give option to look at the code used to build the function, but it is only intended to be helpful, a malicious actor can still obfuscate, omit, or show different code than the actual function.

Furthermore, plugins can also replace functions if they have same name, look out for the warnings while running nadi.

Expect these Changes in the Future Versions to make the plugins system more secure in terms of function replacement problem:

- internal plugin names will be uppercase, and external lowercase, so that external plugins are not mistaken as internal ones, and no overwriting functions,

- external plugin functions always need to be accessed with dot syntax (

plugin.function(...)). And so that external functions are not called accidently.

This still will not solve the first problem, so always be vigilant using plugins. It is recommended to only use plugins developed in house, or those that you have source code of and can compile it yourself.

Further Reading

If you need help on any functions. Use the help as a task. You can use help node or help network for specific help. You can also browse through the function help window in the nadi-ide for help related to each functions.

help node render

Results:

node render (template: '& Template', safe: 'bool' = false)

Render the template based on the node attributes

# Arguments

- `template: & Template` String template to render

- `safe: bool` [def = false] if render fails keep it as it is instead of exiting

For more details on the template system. Refer to the String

Template section of the NADI book.

```task

network load_str("a -> b")

node.x = 13

node assert_eq(render("abc {x}"), "abc 13")

```

Or you can use nadi --fnhelp <function> using the nadi-cli.

Now that you have the overview of the nadi system’s data structures. We’ll jump into the software structure and how to setup and use the system.

If you want more details on any of the data structures refer the Developer’s references, or the library documentation.

Learn by Examples

This section teaches you the basics of the NADI Task System’s syntax with small examples. If you want a higher level example that focuses on the actual research problem instead of syntax then refer to the “Example Research Problems” Section on the sidebar.

For example data download the zip file here

| Topic | Learn About |

|---|---|

| Attributes | Setting and Getting Attributes |

| Control Flow | Control flow, if, else, while loops etc |

| Connections | Loading and modifying connections |

| Counting | Counting nodes in network, conditional |

| Cumulative | Calculating Network cumulative sums and those |

| Import Export | Importing and exporting multiple data formats |

| String Template | Using String Templates to do various things |

Attributes

There are 3 kind of attributes in nadi. Environment, Network and Node attributes. as their name suggests environment attributes are general attributes available in the current context. Network attributes are associated with the currenly loaded network. and node attributes are associated with each nodes.

nadi has special syntax where you can get/set attributes for multiple nodes at once.

network load_str("a -> b\n b -> d\n c -> d\n");

# environmental attribute

env.someattr = 123;

env.other = 1998-12-21;

env array(someattr, other)

# network attribute

network.someattr = true;

network.someattr

# node attributes

node.someattr = "string val";

node.someattr

Results:

[123, 1998-12-21]

true

{

d = "string val",

c = "string val",

b = "string val",

a = "string val"

}

like you saw with the array function, variables used are inferred as the attributes of the current env/network/node task.

you can use attributes from outside of current task type in some cases like:

- env/network variables can be used anywhere

- node variables are valid in node tasks

- node tasks has special variables types like

inputsandoutput

network load_str("a -> b\n b -> d\n c -> d\n");

# environmental attribute

env.someattr = 123;

env.other = 1998-12-21;

# network attribute

network.someattr = true;

# using network attr in env task

env array(network.someattr, other)

# using nodes in network task

network nodes.NAME

Results:

[true, 1998-12-21]

["d", "c", "b", "a"]

Similarly inputs:

network load_str("a -> b\n b -> d\n c -> d\n");

node inputs.NAME

Results:

{

d = ["b", "c"],

c = [],

b = ["a"],

a = []

}

Refer to the network diagram below to verify the output are correct:

network load_str("a -> b\n b -> d\n c -> d\n");

network svg_save("./output/attrs-simp.svg")

Results:

Control Flow

Task has some basic control flow required to write programs. They are if-else branches and while loops.

Conditional (If-Else) Blocks

There are two kind of if-else branches. One is on an expression level. which means there has to be if and else branch both as it expects a return value. The following example shows the expression with if-else block.

env.newvar = if (12 > 90) {"yes"} else {"no"};

env.newvar

Results:

"no"

Trying to do it without else block will result in an parse error as the program will error with a syntax error, for example the code below is invalid

env.newvar = if (12 > 90) {"yes"};

env.newvar

That’s when you can use the if-else block on the task level. This can be only if block as the execution blocks are tasks instead of expressions.

Here, since the condition is negative the task inside the block is never executed, hence env.newvar is empty.

if (12 > 90) {

env.newvar = "yes";

}

env.newvar

*Error*:

EvalError: Attribute not found

While Loop

While loop runs the tasks inside the block repeatedly while the condition is satisfied. There is an iteration limit of 1,000,000 for now just in case people write infinite loop. This is arbritary.

env.somevar = 1;

while (somevar < 10) {

env.somevar

env.somevar = env.somevar + 1;

}

Results:

1

2

3

4

5

6

7

8

9

This can be used to repeat a set of tasks for a various reasons.

Connections

Connections between the nodes is the most important part of nadi. you can load networks by loading a file or string. The network is a simple multiline text with one edge (input -> output) in each line. comments starting with # are supported.

Default is Empty Network

Tasks are run by default with an empty network. So you might still be able to work with network attributes, but the nodes will be empty. also note that when you load network it replaces the old one including the attributes.

network.someattr = 1234;

network.someattr

Results:

1234

But we can see the nodes are not there,

network count()

network nodes.NAME

Results:

0

[]